Publications

Conferences

Nishizawa, T., Tanaka, K., Hirata, A., Yamaguchi, S., Feng, Q., Hamanaka, M., & Morishima, S. (2025). SyncViolinist: Music-Oriented Violin Motion Generation Based on Bowing and Fingering. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision.

Higasa, T., Tanaka, K., Feng, Q., & Morishima, S. (2024). Keep Eyes on the Sentence: An Interactive Sentence Simplification System for English Learners Based on Eye Tracking and Large Language Models. CHI EA '24: Extended Abstracts of the 2024 CHI Conference on Human Factors in Computing Systems.

Feng, Q., & Morishima, S. (2024). Projection-Based Monocular Depth Prediction for 360 Images with Scale Awareness. Visual Computing Symposium 2024.

Inoue, R., Feng, Q., & Morishima, S. (2024). Non-Dominant Hand Skill Acquisition with Inverted Visual Feedback in a Mixed Reality Environment. Visual Computing Symposium 2024.

Feng, Q., Shum, H. P., & Morishima, S. (2023). Enhancing perception and immersion in pre-captured environments through learning-based eye height adaptation. 2023 IEEE International Symposium on Mixed and Augmented Reality (ISMAR).

Feng, Q., Shum, H. P., & Morishima, S. (2023). Learning Omnidirectional Depth Estimation from Internet Videos. In Proceedings of the 26th Meeting on Image Recognition and Understanding.

Higasa, T., Tanaka, K., Feng, Q., & Morishima, S. (2023). Gaze-Driven Sentence Simplification for Language Learners: Enhancing Comprehension and Readability. The 25th International Conference on Multimodal Interaction (ICMI).

Kashiwagi, S., Tanaka, K., Feng, Q., & Morishima, S. (2023). Improving the Gap in Visual Speech Recognition Between Normal and Silent Speech Based on Metric Learning. INTERSPEECH 2023.

Oshima, R., Shinagawa, S., Tsunashima, H., Feng, Q., & Morishima, S. (2023). Pointing out Human Answer Mistakes in a Goal-Oriented Visual Dialogue. ICCV '23 Workshop and Challenge on Vision and Language Algorithmic Reasoning (ICCVW).

Feng, Q., Shum, H. P., & Morishima, S. (2022). 360 Depth Estimation in the Wild - The Depth360 Dataset and the SegFuse Network. In 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR). IEEE.

Feng, Q., Shum, H. P., & Morishima, S. (2021). Bi-projection-based Foreground-aware Omnidirectional Depth Prediction. Visual Computing Symposium 2021.

Feng, Q., Shum, H. P., Shimamura, R., & Morishima, S. (2020). Foreground-aware Dense Depth Estimation for 360 Images. International Conference in Central Europe on Computer Graphics, Visualization and Computer Vision 2020.

Shimamura, R., Feng, Q., Koyama, Y., Nakatsuka, T., Fukayama, S., Hamasaki, M., ... & Morishima, S. (2020). Audio–visual object removal in 360-degree videos. Computer Graphics International 2020.

Feng, Q., Shum, H. P., & Morishima, S. (2018, November). Resolving occlusion for 3D object manipulation with hands in mixed reality. In Proceedings of the 24th ACM Symposium on Virtual Reality Software and Technology.

Feng, Q., Nozawa, T., Shum, H. P., & Morishima, S. (2018, August). Occlusion for 3D Object Manipulation with Hands in Augmented Reality. In Proceedings of the 21st Meeting on Image Recognition and Understanding.

Journals

Nozawa, N., Shum, H. P., Feng, Q., Ho, E. S., & Morishima, S. (2021). 3D car shape reconstruction from a contour sketch using GAN and lazy learning. The Visual Computer, 1-14.

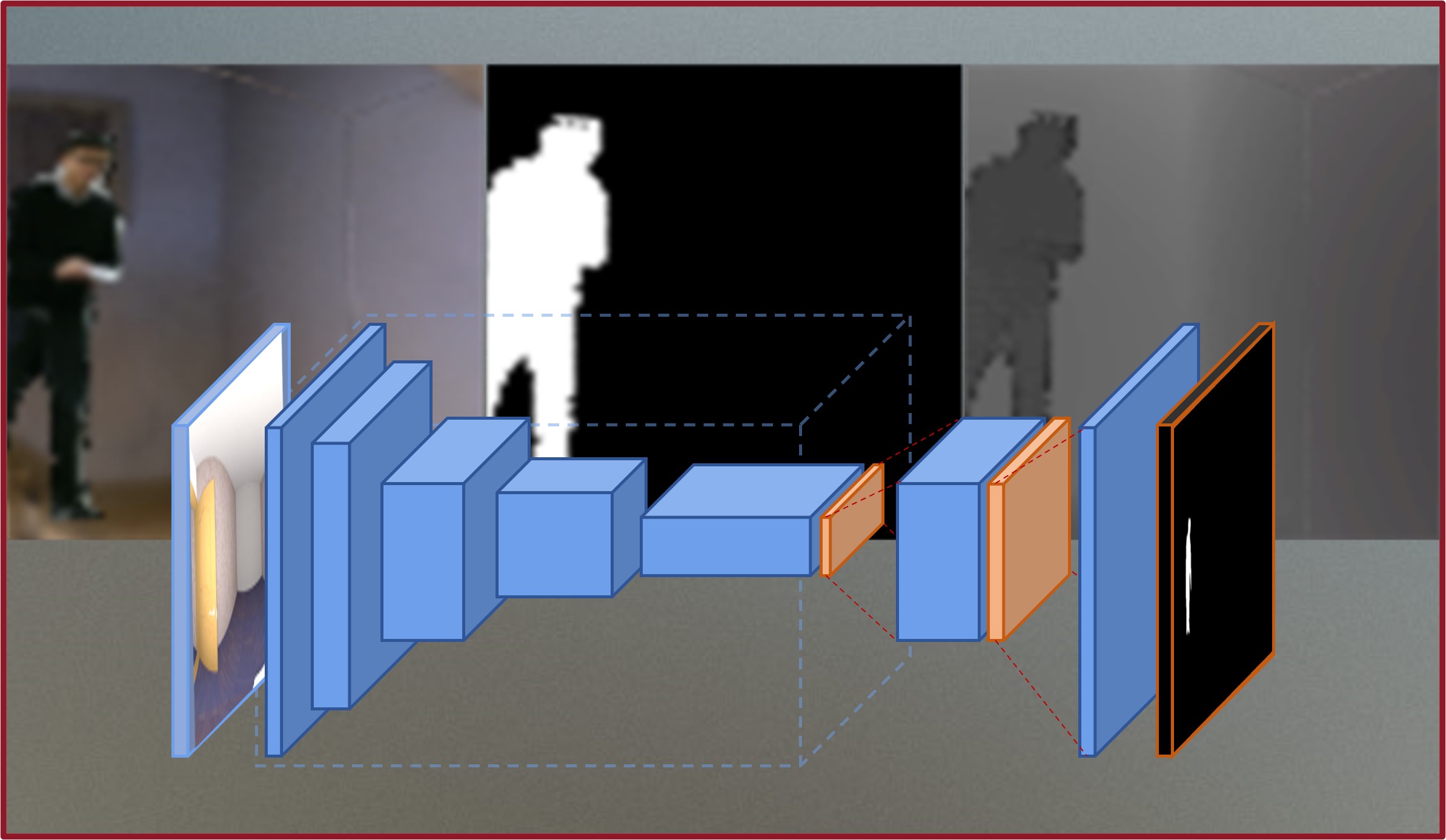

Feng, Q., Shum, H. P., & Morishima, S. (2020). Resolving hand‐object occlusion for mixed reality with joint deep learning and model optimization. Computer Animation and Virtual Worlds, 31(4-5), e1956.

Feng, Q., Shum, H. P., Shimamura, R., & Morishima, S. (2020). Foreground-aware Dense Depth Estimation for 360 Images. Journal of WSCG, 28(1-2), 79-88.

Shimamura, R., Feng, Q., Koyama, Y., Nakatsuka, T., Fukayama, S., Hamasaki, M., ... & Morishima, S. (2020). Audio–visual object removal in 360-degree videos. The Visual Computer, 36(10), 2117-2128.