研究方向

我的研究方向主要是通过基于深度学习的方法解决不同的计算机图形和计算机视觉问题。 除此之外,我也热衷于活用CG/CV的方法来解决一些当前虚拟现实(VR)和增强现实(AR)应用中存在的一系列实际问题。

图像处理和机器视觉 Ph.D.

助理教授

早稻田大学, 森岛研究室

我于2022年9月获得图像处理专业的博士学位。

现在在位于日本东京的早稻田大学

先进理工学部担任助理教授(Assistant Professor)职位。

目前隶属于森岛研究室进行图像处理和机器视觉相关的研究工作。

我的研究方向主要是通过基于深度学习的方法解决不同的计算机图形和计算机视觉问题。 除此之外,我也热衷于活用CG/CV的方法来解决一些当前虚拟现实(VR)和增强现实(AR)应用中存在的一系列实际问题。

中文 - 母语水平 英语 - 熟练掌握 (GRE得分325)

日语 - 熟练掌握 (JLPT N1) 法语 - 简单对话 (CEFR A2)

熟练使用: Python, HTML/CSS, SQL 较为熟悉: C++, C#, Java, Javascript

PyTorch, Torchvision, Tensorflow, OpenCV Git, SharePoint WordPress, Unity3D, Arduino

对象分类, 图像语义分割, 深度预测, 场景重建, 动作预测, 画风转换, 合成训练集

Microsoft Office 365 (Access, SharePoint), Adobe Creative Cloud (Lightroom Classic, Photoshop, Illustrator, After Effects, Premiere Pro, Audition, InDesign), Ableton, Vocaloid, Blender

Feng, Q. , Kayukawa, S., Wang, X., Takagi, H., & Asagawa, C. (Under Review). STAMP: Scale-aware TActile-Map Printing for Accessible Spatial Understanding and Navigation. In 2026 CHI Conference on Human Factors in Computing Systems.

Feng, Q. , Kayukawa, S., Wang, X., Takagi, H., & Asagawa, C. (2025). A Scale-Aware Method for Generating 3D-Printable Tactile Maps. The 33rd Workshop on Interactive Systems and Software (Japan).

Iwakata, S., Oshima, R., Tsunashima, H., Feng, Q., Kataoka, H., & Morishima, S. (2025). Viewpoint-dependent 3D Visual Grounding for Mobile Robots. In 2025 IEEE International Conference on Image Processing.

Inoue, R., Feng, Q. , & Morishima, S. (2025). SynchroDexterity: Rapid Non-Dominant Hand Skill Acquisition with Synchronized Guidance in Mixed Reality. In 2025 IEEE conference on virtual reality and 3D user interfaces (VR). IEEE.

Nishizawa, T., Tanaka, K., Hirata, A., Yamaguchi, S., Feng, Q. , Hamanaka, M., & Morishima, S. (2025). SyncViolinist: Music-Oriented Violin Motion Generation Based on Bowing and Fingering. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision.

Higasa, T., Tanaka, K., Feng, Q. , & Morishima, S. (2024). Keep Eyes on the Sentence: An Interactive Sentence Simplification System for English Learners Based on Eye Tracking and Large Language Models. CHI EA '24: Extended Abstracts of the 2024 CHI Conference on Human Factors in Computing Systems.

Feng, Q. , & Morishima, S. (2024). Projection-Based Monocular Depth Prediction for 360 Images with Scale Awareness. Visual Computing Symposium 2024.

Inoue, R., Feng, Q., & Morishima, S. (2024). Non-Dominant Hand Skill Acquisition with Inverted Visual Feedback in a Mixed Reality Environment. Visual Computing Symposium 2024.

Feng, Q. , Shum, H. P., & Morishima, S. (2023). Enhancing perception and immersion in pre-captured environments through learning-based eye height adaptation. 2023 IEEE International Symposium on Mixed and Augmented Reality (ISMAR).

Feng, Q. , Shum, H. P., & Morishima, S. (2023). Learning Omnidirectional Depth Estimation from Internet Videos. In Proceedings of the 26th Meeting on Image Recognition and Understanding.

Higasa, T., Tanaka, K., Feng, Q., & Morishima, S. (2023). Gaze-Driven Sentence Simplification for Language Learners: Enhancing Comprehension and Readability. The 25th International Conference on Multimodal Interaction (ICMI).

Kashiwagi, S., Tanaka, K., Feng, Q., & Morishima, S. (2023). Improving the Gap in Visual Speech Recognition Between Normal and Silent Speech Based on Metric Learning. INTERSPEECH 2023.

Oshima, R., Shinagawa, S., Tsunashima, H., Feng, Q., & Morishima, S. (2023). Pointing out Human Answer Mistakes in a Goal-Oriented Visual Dialogue. ICCV '23 Workshop and Challenge on Vision and Language Algorithmic Reasoning (ICCVW).

Feng, Q. , Shum, H. P., & Morishima, S. (2022). 360 Depth Estimation in the Wild-The Depth360 Dataset and the SegFuse Network. In 2022 IEEE conference on virtual reality and 3D user interfaces (VR). IEEE.

Feng, Q. , Shum, H. P., & Morishima, S. (2021). Bi-projection-based Foreground-aware Omnidirectional Depth Prediction. Visual Computing Symposium 2021.

Feng, Q. , Shum, H. P., Shimamura, R., & Morishima, S. (2020). Foreground-aware Dense Depth Estimation for 360 Images. International Conference in Central Europe on Computer Graphics, Visualization and Computer Vision 2020.

Shimamura, R., Feng, Q., Koyama, Y., Nakatsuka, T., Fukayama, S., Hamasaki, M., ... & Morishima, S. (2020). Audio–visual object removal in 360-degree videos. Computer Graphics International 2020.

Feng, Q. , Shum, H. P., & Morishima, S. (2018, November). Resolving occlusion for 3D object manipulation with hands in mixed reality. In Proceedings of the 24th ACM Symposium on Virtual Reality Software and Technology.

Feng, Q. , Nozawa, T., Shum, H. P., & Morishima, S. (2018, August). Occlusion for 3D Object Manipulation with Hands in Augmented Reality. In Proceedings of The 21st Meeting on Image Recognition and Understanding.

Nozawa, N., Shum, H. P., Feng, Q., Ho, E. S., & Morishima, S. (2021). 3D car shape reconstruction from a contour sketch using GAN and lazy learning. The Visual Computer, 1-14.

Feng, Q. , Shum, H. P., & Morishima, S. (2020). Resolving hand‐object occlusion for mixed reality with joint deep learning and model optimization. Computer Animation and Virtual Worlds, 31(4-5), e1956.

Feng, Q. , Shum, H. P., Shimamura, R., & Morishima, S. (2020). Foreground-aware Dense Depth Estimation for 360 Images., Journal of WSCG, 28(1-2), 79-88.

Shimamura, R., Feng, Q., Koyama, Y., Nakatsuka, T., Fukayama, S., Hamasaki, M., ... & Morishima, S. (2020). Audio–visual object removal in 360-degree videos. The Visual Computer, 36(10), 2117-2128.

发布于Github上的开源项目:

我们提出的SyncViolinist是一个多阶段端到端框架,可完全通过音频输入生成同步的小提琴演奏动作。它成功克服了同时捕捉全局和细微演奏特征的难题。

我们首先提出了一种从丰富的互联网360度全景视频中生成大量匹配的颜色/深度训练数据的方法。 其次我们提出了一个多任务网络来学习360度图像的单眼深度预测。实验结果高效且准确。

我们首先通过图像处理的方法来获取了不同样式的前景颜色/深度的训练数据。用新颖的方法将其合成至现有的360度图像的训练集后, 我们继而提出了一个多任务辅助网络和损失函数,成功克服了现有方法对前景对象预测结果较差的问题。

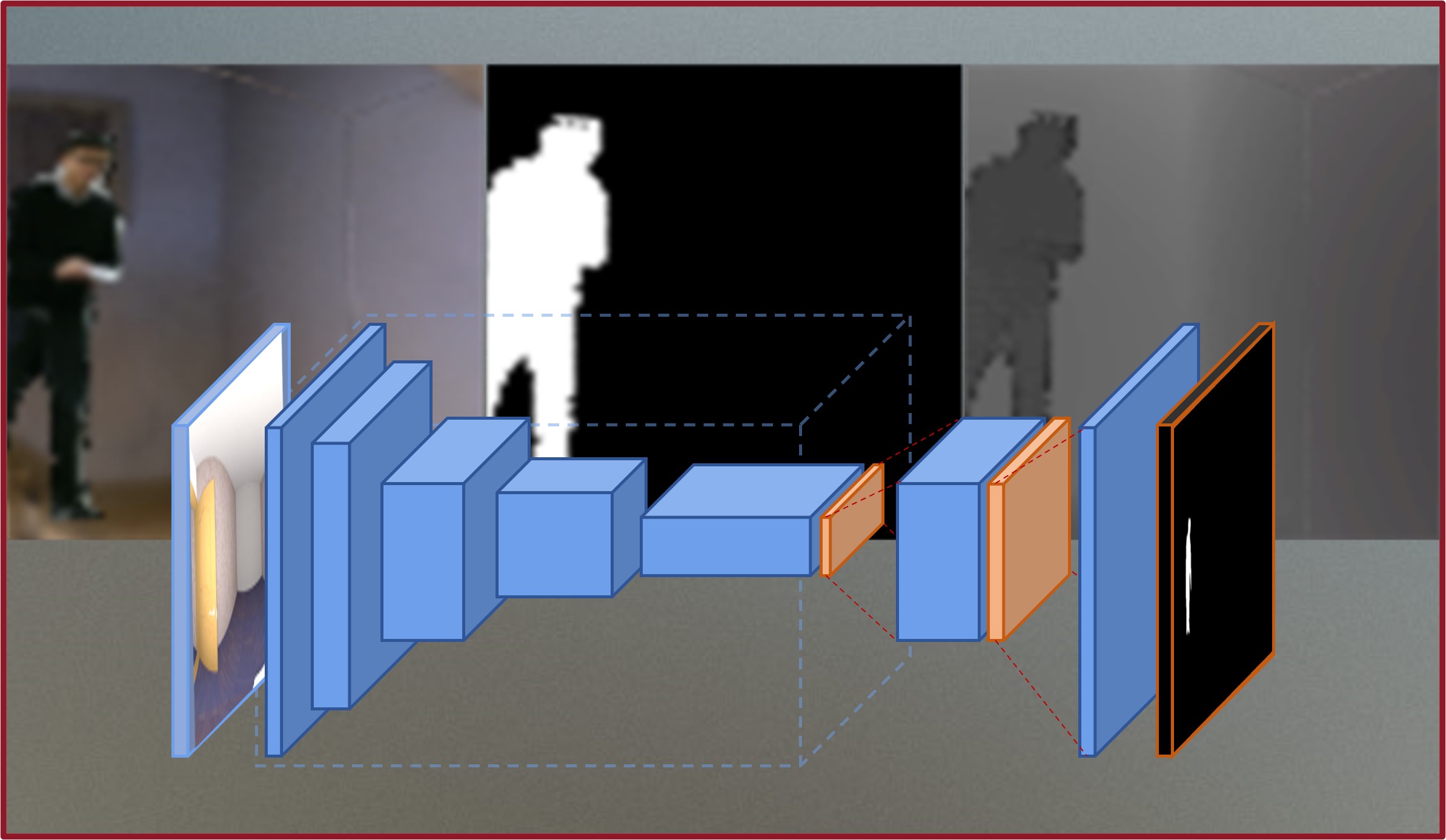

为了解决混合现实(MR)中常见的由于场景结构未知而导致的手与物体间的遮蔽问题,我们通过CycleGAN生成了大规模 真实且准确的颜色/深度/姿势训练集,然后提出了一个实时的基于姿势追踪和语义分割的多任务网络。使用者实验和量化分析都获得了很好的结果。

电话: +81-3-5286-3510

传真: +81-3-5286-3510

地址: 55N406 3-4-1 Okubo, Shinjuku-ku, Tokyo, 169-0072

邮件: fengqi[at]ruri.waseda.jp